The Rise of AI-Generated Content: How It’s Transforming the Internet

AI is not “coming.” It is here.

It writes. It draws. It speaks. It edits video. It makes music. It can do it fast, and it can do it at scale.

That shift is changing what the internet feels like. It is changing what we read, what we watch, and what we trust. It is also changing how people make a living online.

Some of this is exciting. Some of it is messy. A lot of it is both at the same time.

Let’s look at what AI-generated content really is, why it exploded, and what it is doing to the web right now.

What AI-Generated Content Means

AI-generated content is anything made by a model instead of a person.

It can be:

- Text (blog posts, emails, product pages, comments)

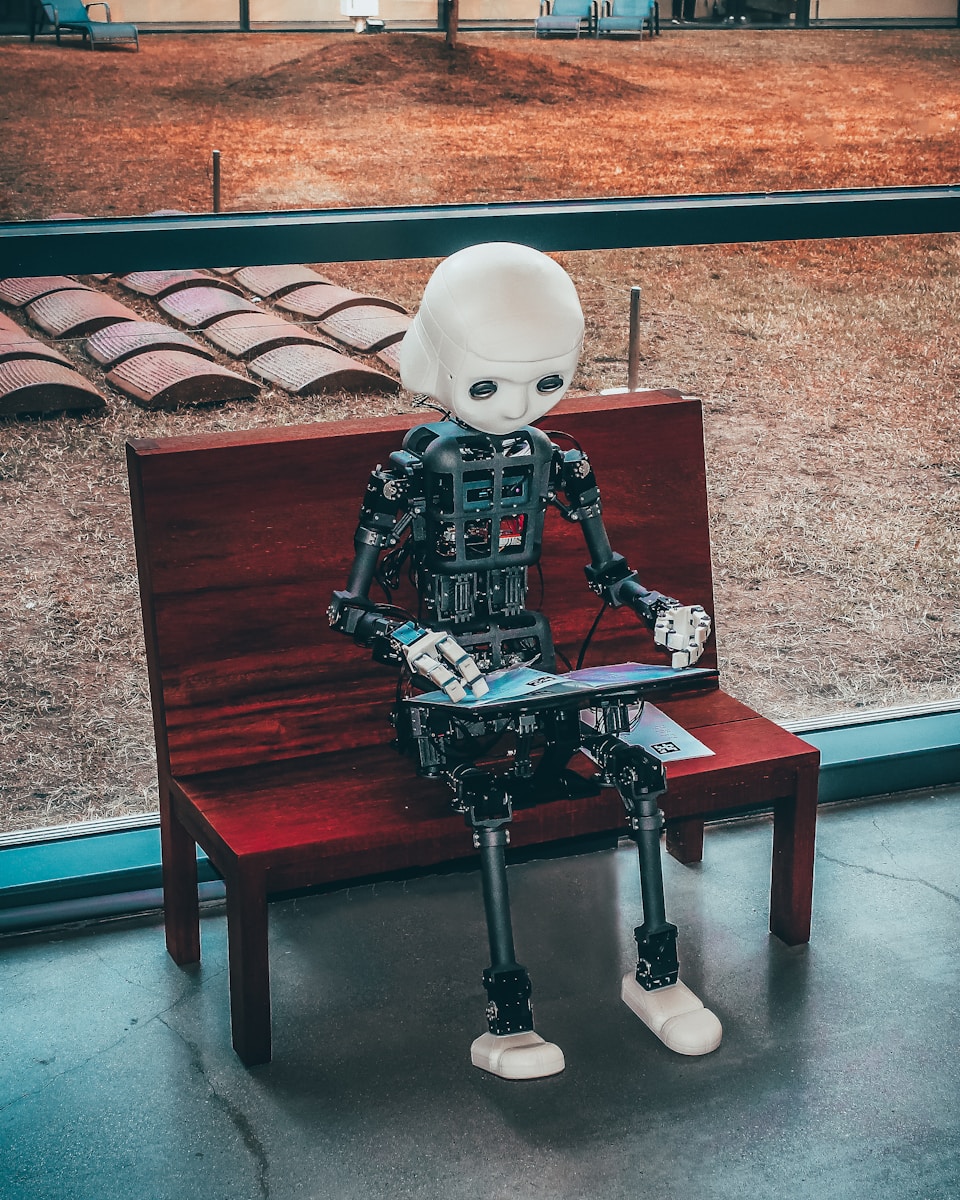

- Images (art, ads, “photos,” logos)

- Video (clips, voiceovers, face swaps)

- Audio (AI voices, songs, sound effects)

The tools feel simple on the surface. You type a prompt. You get a result.

But under the hood, the model learned patterns from giant piles of data. It learned how words often go together. It learned how a face is shaped. It learned how light hits skin. It learned how a news story is structured.

Then it creates new output that looks like human work.

That is the core trick. It copies style, not lived experience.

Why AI Content Took Off So Fast

Three reasons explain most of it.

Speed

AI can draft in seconds.

A human can do great work. But we get tired. We need breaks. We need time to think. AI does not.

So businesses and creators use it to move faster.

Cost

Content is expensive when done well.

Writers, designers, editors, and producers deserve pay. Still, many teams have small budgets. AI tools cut costs in a big way.

That makes AI tempting, even when quality drops.

Access

AI tools lower the skill barrier.

You do not need to know Photoshop to make an image. You do not need to know audio gear to make a voice track. You do not need to be a strong writer to create a first draft.

That is why AI tools spread so fast. They made “content creation” feel open to everyone.

The Internet Is Getting Flooded

This part is hard to ignore.

We now live in an age of near-zero-cost content. If one person can publish 10 posts a week, an AI system can publish 10,000.

Some analysis groups tracking web publishing have reported that AI-written articles reached a point where they rival human-written articles in volume during 2024 and 2025. One set of reporting tied to Graphite data suggests a steep rise after late 2022, with AI nearing half of new articles by mid-2025. Another Graphite write-up says AI-published articles surpassed human-written articles in late 2024.

That does not mean “half the whole internet” is AI. It means new content streams are shifting fast.

So what changes when the web fills with machine-made pages?

A lot.

Search Is Changing Its Filters

Search engines want helpful pages. They also want to stop spam.

Google has been clear on one key point: AI content is not banned just because it is AI. What matters is whether the content is helpful and made for people, not just to rank.

Google also warns about “scaled content abuse.” In plain terms, making lots of pages with AI, with little value, can break spam rules.

So the pressure is rising on anyone publishing at scale. AI can help, but it can also backfire if it produces thin, copy-paste pages.

This is one reason we see a new kind of internet split:

- A flood of fast, shallow content

- A renewed push for real experience, real proof, and real voice

The “Trust Problem” Is Getting Bigger

When content looks human, we treat it like human work.

That is where trouble starts.

Misinformation becomes cheaper

Fake “news” can be produced in minutes. Fake screenshots can be made on demand. Fake quotes can spread before anyone checks.

This does not mean AI caused misinformation. Misinformation is old.

But AI makes it easier and faster. It also makes it look more real.

Deepfakes raise the stakes

AI video and voice can mimic real people. That can harm reputations. It can confuse voters. It can fuel scams.

So platforms are building new rules and new labels.

YouTube now requires creators to disclose content that is meaningfully altered or synthetically generated when it seems realistic.

That policy shift is a big deal. It is a sign of where the internet is going: more disclosure, more labeling, more enforcement.

Platforms Are Adding “AI Labels” and Detection Tools

Labels are not perfect. Still, they help.

YouTube has also rolled out tools aimed at spotting deepfakes of creators, using “likeness detection” style systems.

This is part of a wider platform trend:

- Give people a way to report AI impersonation

- Add labels for realistic synthetic media

- Reduce the chance of “viral fake” harm

It is not a full solution. It is a start.

Laws Are Catching Up, Slowly

Regulators have noticed the same problem we all see.

When AI content looks real, people can be tricked.

That is why the EU AI Act includes transparency obligations for AI-generated and manipulated content, including deepfakes. Article 50 is widely discussed as the key section for these disclosure duties.

Europe is also working on a Code of Practice to guide how AI content is marked and labeled.

Some countries are moving even faster. Reuters reported Spain approved a bill in 2025 aimed at strict labeling of AI-generated content, with large fines for non-compliance, aligned with the EU AI Act direction.

So the direction is clear: more rules, more labels, more accountability.

Creators Are Using AI as a “Second Brain”

Not all AI content is spam or deception.

A lot of it is normal creative work.

Many creators use AI to:

- brainstorm titles

- outline a video

- rewrite a rough draft

- create a thumbnail idea

- generate captions

- translate content

- speed up editing

In other words, AI often acts like a helper.

That can be great when the creator stays in control.

It can also lead to sameness when everyone uses the same tools the same way. We see that in:

- look-alike blog posts

- copycat social captions

- identical “tips” videos

- repeated phrases and rhythms

The internet starts to feel like it is repeating itself.

Businesses Are Scaling Content Like Never Before

For businesses, AI content is a growth lever.

It helps create:

- product descriptions

- support articles

- email campaigns

- ad variants

- landing pages

- FAQs

Some of this improves the web. Helpful support pages matter. Clear product info matters.

But “scale” can also become a trap.

If a company publishes 5,000 AI pages that add nothing new, it clutters search and wastes reader time.

So the best teams are doing something simple:

They use AI for speed, then add human checks, real examples, and real testing.

The Internet Is Becoming More “Personal” and More “Fake” at Once

This is the strange part.

AI makes personalization easy.

A site can change text based on who you are. A tool can write an email just for you. A shopping app can tailor product stories to your vibe.

That can feel nice.

At the same time, AI makes “fake people” easier. Fake reviews. Fake profiles. Fake comments. Fake influencers. Fake support agents.

So we get two worlds, side by side:

- A more helpful internet

- A more suspicious internet

We will likely keep living in both.

What This Means for Jobs and Creative Work

AI is changing work, not ending it.

Some tasks will shrink:

- basic SEO filler writing

- simple product copy

- quick logo drafts

- basic video captions

But other work grows:

- editing and verification

- brand voice work

- deep research and reporting

- creative direction

- original photo and video

- trusted expert writing

- legal and policy review

In a flooded market, the value shifts from “making words” to “making sense.”

Humans stay important where meaning matters.

Bias Is Still a Real Problem

AI models learn from data. Data reflects people. People reflect history. History reflects bias.

So AI can produce:

- unfair stereotypes

- harmful assumptions

- skewed examples

- missing perspectives

This is not a small issue. It affects:

- hiring tools

- education tools

- medical content

- legal content

- news summaries

So we need testing, oversight, and clear standards. That is part of why transparency rules are growing in the EU and on major platforms.

The Web Is Shifting Toward Proof

As AI content rises, proof matters more.

We already see the web rewarding:

- original photos

- first-hand testing

- expert experience

- clear sourcing

- real author identity

- unique opinions backed by facts

This is not about being fancy. It is about being real.

When anyone can generate a “guide,” the guide that wins is the one that shows it has skin in the game.

A Different Internet Is Being Built

AI-generated content is not a side trend. It is changing the shape of the internet.

It is making content:

- cheaper to produce

- harder to trust

- easier to personalize

- easier to fake

- more regulated

- more competitive

We can meet this moment with panic, or with craft.

The path that works best is simple:

Use AI for speed.

Use humans for meaning.

Use rules for safety.

Use transparency to keep trust alive.

The Next Chapter Online

We are entering a time where the internet will feel more like a blended world.

Some pages will be human. Some will be machine. Many will be both.

The winners will not be the ones who publish the most.

They will be the ones who respect the reader.

Because in a world full of instant content, attention becomes rare.

And trust becomes everything.
